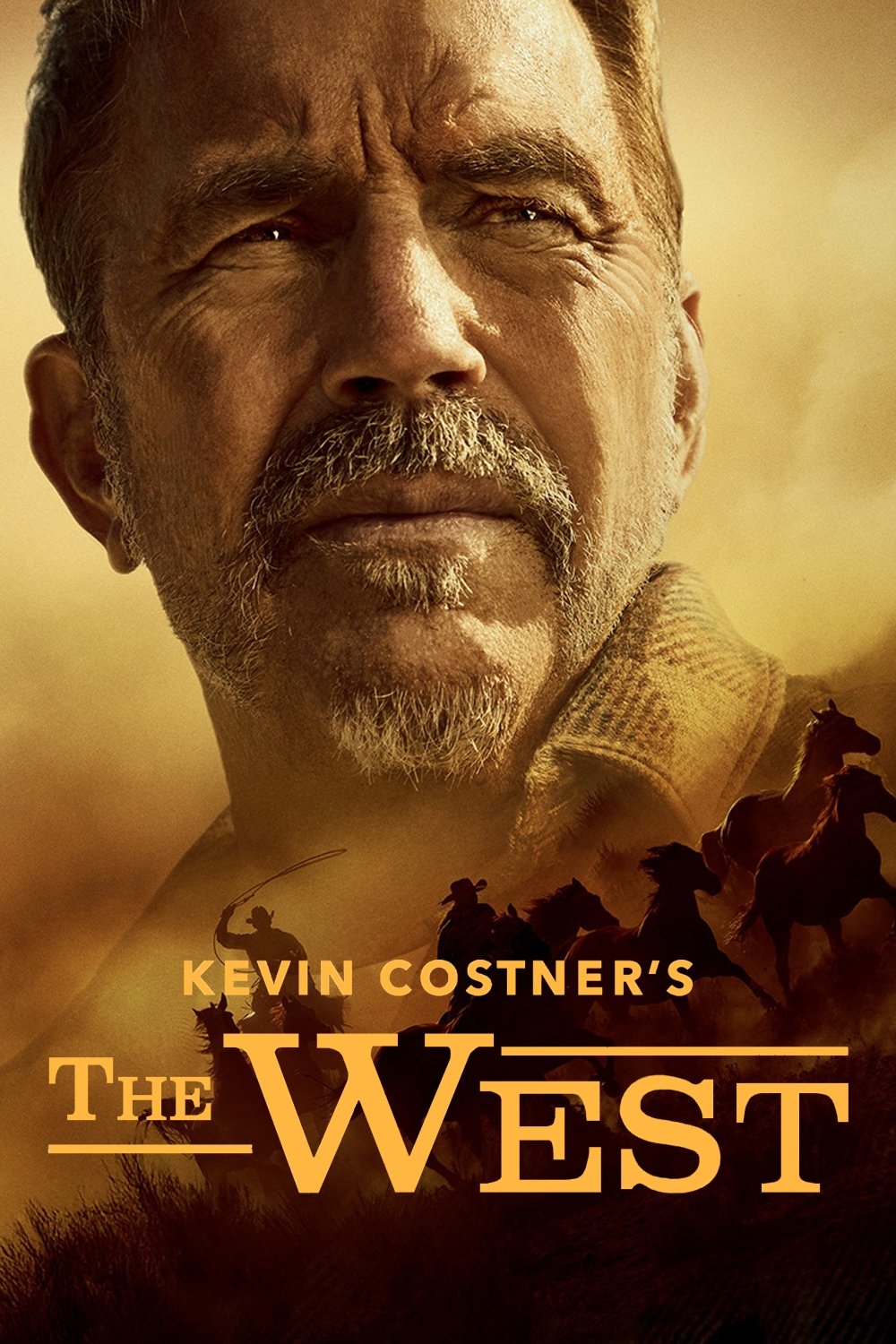

Kevin Costner's The West

This fresh look at the epic history of the American West delves into the desperate struggle for the land itself – and how it still shapes the America we know today.

Kevin Costner

Clay Jenkinson

Yohuru Williams

Doris Kearns Goodwin

H.W. Brands

Elliott West